If you use cloud computing services, there’s a good chance that you have interacted with Amazon Web Services. It’s the, well, Amazon of cloud computing. Since 2012, AWS has been identified by Netcraft as the largest hosting company in the world. According to the same survey, their nearest competitor, DigitalOcean, has fewer than half as many web-facing computers.

Back in the dark ages, EC2 instances were only insulated from the public Internet by their security groups, whose rules weren’t always reliably applied. There wasn’t a way to logically (or physically) isolate instances from the public cloud. Fear, uncertainty, and dismay ran amok. Smaller competitors began marketing the term “hybrid cloud.” Times were perilous.

Then, in 2009, AWS introduced a new feature, the Virtual Private Cloud, which allowed cloud infrastructure to be deployed in a virtual network. They wisely recommended that more complex deployments use a pattern which included both “public” and “private” subnets. In a public subnet, EC2 instances could send network traffic to the Internet through a virtual gateway, not unlike classic EC2. As long as they had a public IP address, they could also receive inbound traffic, provided that any local firewall rules and the assigned VPC security groups allowed it.

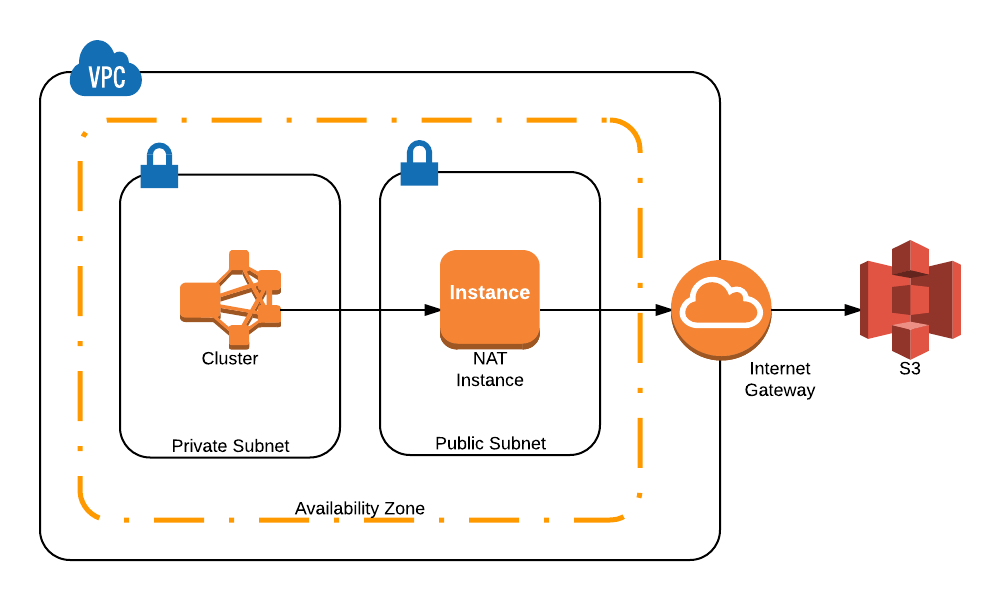

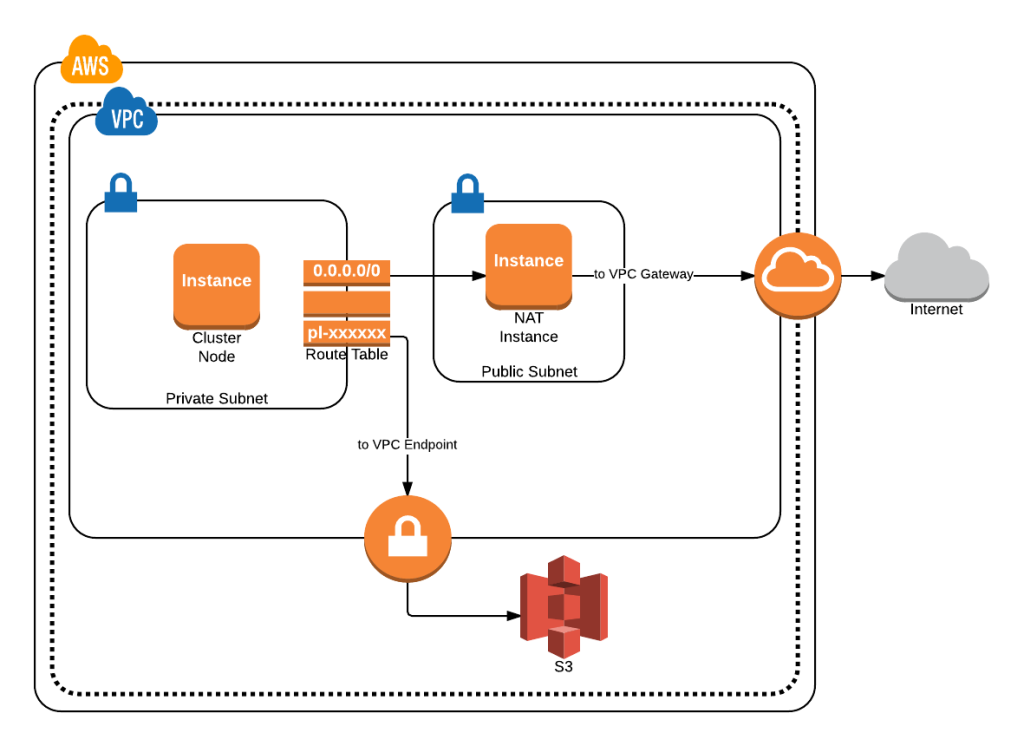

Private subnets were originally intended for backend services, such as database servers. Unless a NAT instance had been provisioned, EC2 instances in a private subnet were unable to reach the Internet. Unfortunately, it also meant that they couldn’t reach other AWS endpoints, such as S3. That had striking implications for anyone who desired the network isolation provided by a private subnet, but also required the ability to apply software updates or transfer data to designated ranges outside of the VPC. It was sometimes argued that those operations (which were usually backups) should simply be conducted by instances in the public subnet, which could communicate with the private subnet by virtue of their local network route.

That would have made perfect sense for anyone transferring a small amount of data. But what if they were running a large, distributed database, like Cassandra or HBase? In that case, there were a few unsettlingly brittle options. They could launch a short-lived cluster of EC2 instances in a public subnet, which would “reach inside” the private subnet to extract data and perform the backup. Alternatively, they could attempt to create snapshots of their data on EBS volumes, although that sometimes proved disruptive. Finally, they could just vertically scale a NAT instance as far as possible to minimize the bottleneck, and then accept the network degradation while transfer operations flowed through it.

NAT instances also came with security drawbacks. Although it was still possible to restrict egress traffic by enforcing outbound rules on the NAT instance, instances in the private subnet were now able to reach the Internet, which increased the risk of malicious software communicating with external hosts. In addition, the NAT instance was a single point of failure unless it belonged to an auto-scaling group, and even then, additional automation was necessary to ensure that a dedicated ENI was attached to whichever NAT instance was currently running.

When many instances attempted simultaneous S3 transfer operations, the single NAT instance was quickly overwhelmed, and host machines occasionally melted into a puddle of liquid metal.

Last month, AWS rolled out a new feature, VPC endpoints, without too much fanfare. Simply put, VPC endpoints allow instances within a private subnet to reach endpoints for other AWS products by adding internal network routes. Outside of a brief interruption as the new routes become active and the source IP addresses change, the required route table entries are seamlessly and invisibly managed. Most enticingly, it means that instances in a private subnet are able to reach S3 without having their traffic routed through a NAT.

Because S3 lends itself to distributed operations and provides an inexpensive, durable place to store large data sets, it has become a linchpin for many groups that work with Big Data. And, because Hadoop includes native support for it, both MapReduce and Spark – the two most popular distributed computation frameworks – are able to use S3 as they would any other file system. In fact, AWS has deeply integrated the service with their own Elastic MapReduce product.

As it turns out, package repositories are available on S3 for both Amazon Linux and Ubuntu, meaning that a VPC endpoint could facilitate both system updates and distributed backup operations. When this new feature is combined with EBS volume encryption and physically isolated, dedicated host tenancy, AWS might become a lot more compelling to those with concerns about security and compliance.

Each instance within the cluster may now access S3 directly, without sending and receiving data through a NAT instance.

While writing this post, I decided to explore how VPC endpoints behave in practice. For the exercise, I configured a VPC with an EC2 instance located within a private subnet, as well as a NAT instance in the adjacent public subnet. Before configuring the VPC endpoint, the internal name server responded with a standard, publicly addressable endpoint for S3.

    [ec2-user@ip-192-168-2-134 ~]$ nslookup s3-external-1.amazonaws.com
    Server:         192.168.0.2
    Address:        192.168.0.2#53

    Non-authoritative answer:
    Name:   s3-external-1.amazonaws.com
    Address: 54.231.66.98

The route table associated with the subnet had been altered to include an entry that would send all outbound traffic through the ENI attached to the NAT instance.

    [ec2-user@ip-192-168-2-134 ~]$ route
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    default         192.168.2.1     0.0.0.0         UG    0      0        0 eth0
    169.254.169.254 *               255.255.255.255 UH    0      0        0 eth0
    192.168.2.0     *               255.255.255.0   U     0      0        0 eth0

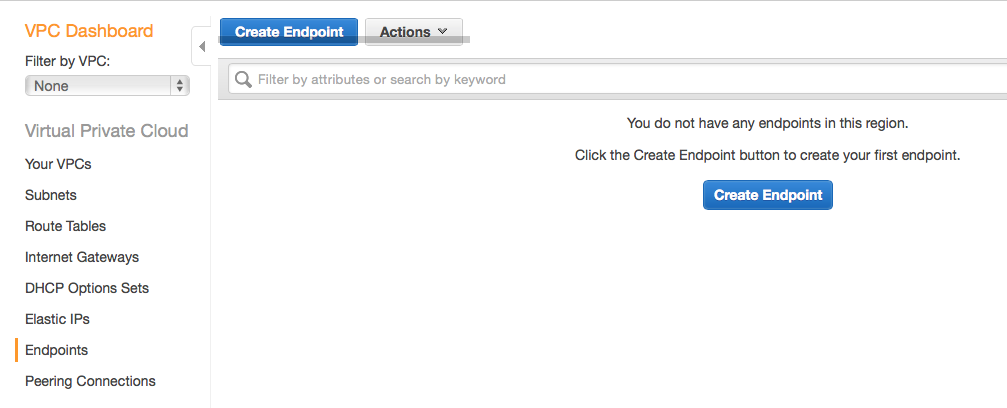

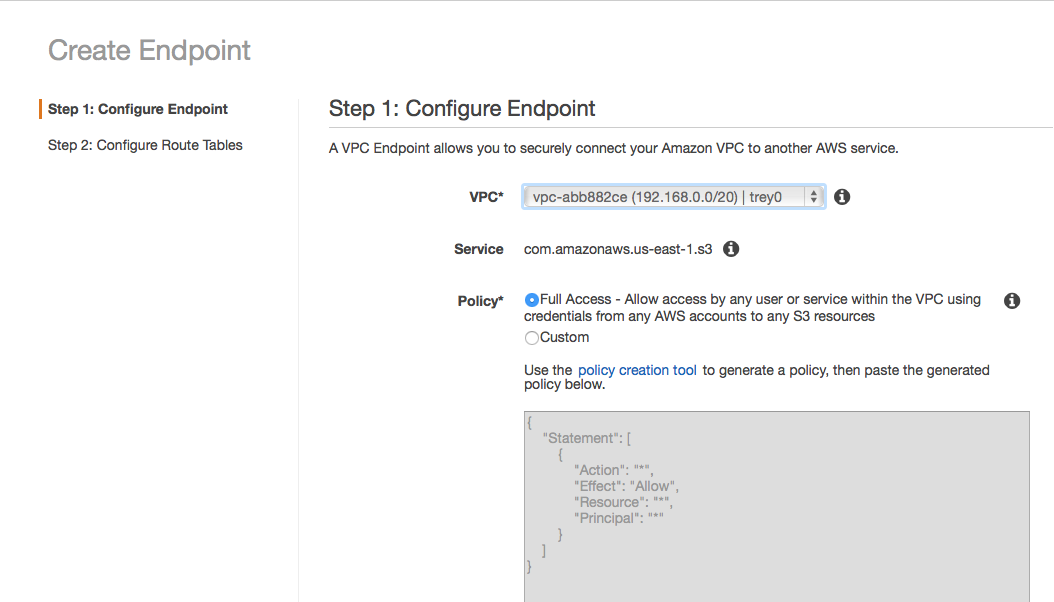

It was trivial to configure a VPC endpoint through the AWS web console. I simply chose the “Endpoints” link, and then the “Create Endpoint” button.

I selected my VPC, and then defined an endpoint policy. Admittedly, it was a bit lenient.

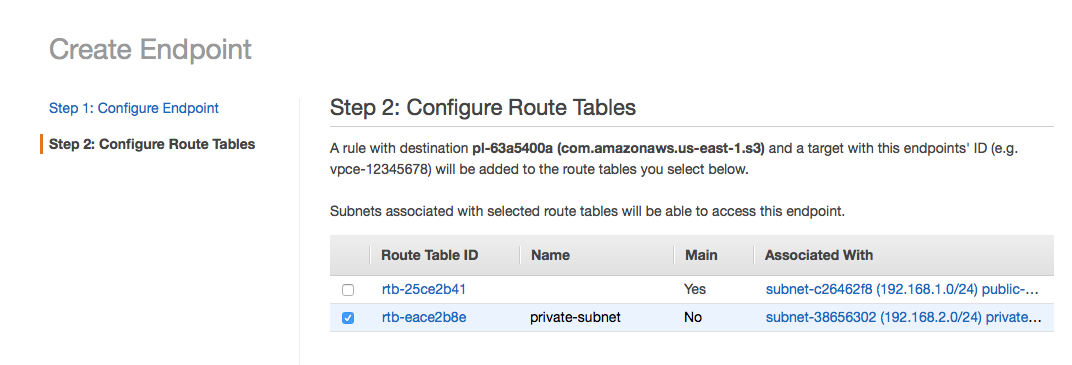
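For anyone who wants something less lenient, here is a minimal sketch of a tighter endpoint policy, built as a Python dictionary and serialized to JSON. The bucket name `example-backup-bucket`, the helper `build_s3_endpoint_policy`, and the chosen list of actions are all assumptions made for illustration; they are not what I used in this exercise.

```python
import json


def build_s3_endpoint_policy(bucket_name):
    """Return an endpoint policy that only allows access to one bucket.

    bucket_name is a hypothetical placeholder; substitute your own bucket.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": "*",
                # Assumed set of actions for a backup workload.
                "Action": ["s3:GetObject", "s3:PutObject", "s3:ListBucket"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",      # the bucket itself
                    f"arn:aws:s3:::{bucket_name}/*",    # objects inside it
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Print the JSON document that would be pasted into the policy field.
    print(json.dumps(build_s3_endpoint_policy("example-backup-bucket"), indent=2))
```

Keep in mind that a policy scoped to a single bucket would presumably also block any other S3-hosted resources that instances rely on, such as package repositories, so the resource list may need to grow accordingly.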

Finally, I picked the route tables that would be adjusted to suit the VPC endpoint.

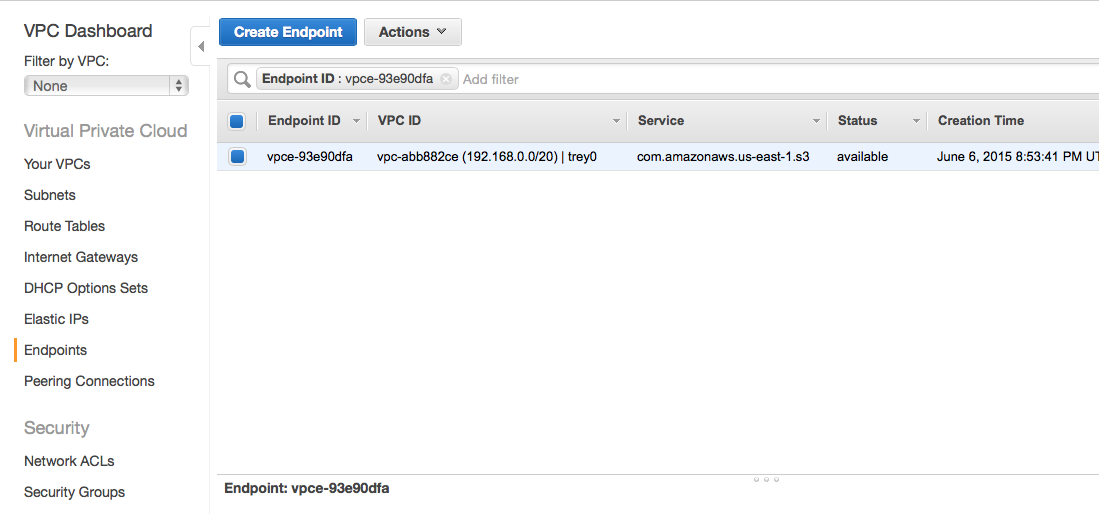

According to the console, I had an active VPC endpoint!
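The same steps can probably be scripted rather than clicked through. The sketch below assumes the third-party boto3 library and its `create_vpc_endpoint` call; the helper names, the VPC ID, and the route table ID are hypothetical placeholders, and I did not use this script for the exercise described here.

```python
import json


def build_endpoint_request(vpc_id, route_table_ids, region="us-east-1", policy=None):
    """Assemble the parameters for an S3 VPC endpoint request.

    The service name follows the com.amazonaws.<region>.s3 pattern.
    """
    request = {
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        "RouteTableIds": list(route_table_ids),
    }
    if policy is not None:
        # The API expects the policy as a JSON string, not a dict.
        request["PolicyDocument"] = json.dumps(policy)
    return request


def create_endpoint(request, region="us-east-1"):
    """Send the request to AWS. Requires boto3 and valid credentials."""
    import boto3  # imported lazily so the builder works without boto3 installed

    ec2 = boto3.client("ec2", region_name=region)
    return ec2.create_vpc_endpoint(**request)


if __name__ == "__main__":
    # Placeholder identifiers; replace with real ones before calling create_endpoint.
    req = build_endpoint_request("vpc-00000000", ["rtb-00000000"])
    print(json.dumps(req, indent=2))
```

Separating the request builder from the API call makes it possible to inspect exactly what would be sent before touching a live VPC.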

Once I removed the outbound NAT entry from the route table associated with the private subnet, I was no longer able to ping Google.

    [ec2-user@ip-192-168-2-134 ~]$ ping -c4 google.com -w5
    PING google.com (74.125.141.113) 56(84) bytes of data.

    --- google.com ping statistics ---
    6 packets transmitted, 0 received, 100% packet loss, time 5000ms

I was, however, still able to install nmap, as the Amazon Linux yum repositories are conveniently hosted on S3. The service was still reachable using what appeared to be the public endpoint. Because I had already terminated the NAT instance and removed its entry from the route table, traffic destined for S3 was clearly being routed through the VPC endpoint.

    [ec2-user@ip-192-168-2-134 ~]$ nslookup s3-1.amazonaws.com
    Server:         192.168.0.2
    Address:        192.168.0.2#53

    Non-authoritative answer:
    Name:   s3-1.amazonaws.com
    Address: 54.231.0.32

    [ec2-user@ip-192-168-2-134 ~]$ nc -z s3-1.amazonaws.com 443
    Connection to s3-1.amazonaws.com 443 port [tcp/https] succeeded!
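If you would rather run this kind of reachability check from a script, the following is a rough Python equivalent of the `nc -z` probe above, using only the standard library. The function name `can_connect` and the three-second timeout are my own choices for illustration.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the name and completes the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


if __name__ == "__main__":
    for host in ("s3-1.amazonaws.com", "google.com"):
        status = "reachable" if can_connect(host, 443) else "unreachable"
        print(f"{host}:443 is {status}")
```

From an instance whose only outbound path is the VPC endpoint, I would expect the S3 host to be reachable and everything else to fail, although the result depends entirely on the route tables and security groups in play.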

Unsurprisingly, all of this was completely invisible to the EC2 instance, just as it should be. The managed routes ensure that any traffic matching the prefix list – in this case, anything destined for S3 – is always routed through the internal AWS network.

    [ec2-user@ip-192-168-2-134 ~]$ ip route get 54.231.1.160
    54.231.1.160 via 192.168.2.1 dev eth0 src 192.168.2.134

Interestingly, you can retrieve the CIDR range of the service using the “describe-prefix-lists” command. In this case, it was revealed to be 54.231.0.0/17. Presumably, if I had a more specific entry in my own route table, I could still coerce traffic to flow through a NAT instance or a VPC gateway. Of course, I can’t imagine why anyone would want to do that.

    Treys-MacBook-Pro:~ treyperry$ aws ec2 describe-prefix-lists
    {
        "PrefixLists": [
            {
                "PrefixListName": "com.amazonaws.us-east-1.s3",
                "Cidrs": [
                    "54.231.0.0/17"
                ],
                "PrefixListId": "pl-63a5400a"
            }
        ]
    }
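To illustrate why a more specific route would presumably win, here is a small sketch of longest-prefix-match route selection using Python's standard `ipaddress` module. The route table below is a simplified model rather than a dump of my real one: the `192.168.0.0/16` VPC range and the target labels (`local`, `nat-instance`, `vpc-endpoint`) are assumptions for the sake of the example.

```python
import ipaddress


def select_route(routes, destination):
    """Pick the target of the most specific route that contains destination.

    routes is a list of (cidr, target) pairs; returns None if nothing matches.
    """
    dest = ipaddress.ip_address(destination)
    matches = []
    for cidr, target in routes:
        network = ipaddress.ip_network(cidr)
        if dest in network:
            matches.append((network.prefixlen, target))
    if not matches:
        return None
    # The longest prefix (largest prefixlen) is the most specific match.
    return max(matches, key=lambda match: match[0])[1]


routes = [
    ("192.168.0.0/16", "local"),        # assumed VPC range
    ("0.0.0.0/0", "nat-instance"),      # default route through the NAT
    ("54.231.0.0/17", "vpc-endpoint"),  # S3 prefix list from the output above
]

print(select_route(routes, "54.231.1.160"))    # vpc-endpoint
print(select_route(routes, "74.125.141.113"))  # nat-instance

# Adding a narrower route for part of the S3 range overrides the endpoint.
override = routes + [("54.231.1.0/24", "nat-instance")]
print(select_route(override, "54.231.1.160"))  # nat-instance
```

The S3 address resolved earlier, 54.231.0.32, falls inside the same /17, which is consistent with the traffic taking the endpoint route once the NAT entry was gone.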

By delivering this long-awaited feature and making VPC endpoints trivial to integrate, Amazon Web Services has made it significantly easier to maintain infrastructure within their cloud environment.

Article Image Credit: “Tech Dots” by Kristine Full. Licensed under CC BY 2.0 via Flickr.